Building AI applications is hard. Putting them to use across a business can be even harder.

Less than one-third of enterprises that have begun adopting AI actually have it in production, according to a recent IDC survey.

Businesses often realize the full complexity of operationalizing AI just before launching an application. Problems discovered so late can seem insurmountable, so the deployment effort is often stalled and forgotten.

To help enterprises get AI deployments across the finish line, more than 100 machine learning operations (MLOps) software providers are working with NVIDIA. These MLOps pioneers provide a broad array of solutions to help businesses optimize their AI workflows, both for existing operational pipelines and for ones built from scratch.

Many NVIDIA MLOps and AI platform ecosystem partners, as well as DGX-Ready Software partners, including Canonical, ClearML, Dataiku, Domino Data Lab, Run:ai and Weights & Biases, are building solutions that integrate with NVIDIA-accelerated infrastructure and software to meet the needs of enterprises operationalizing AI.

NVIDIA cloud service provider partners Amazon Web Services, Google Cloud, Azure and Oracle Cloud, as well as other partners around the globe, such as Alibaba Cloud, also provide MLOps solutions to streamline AI deployments.

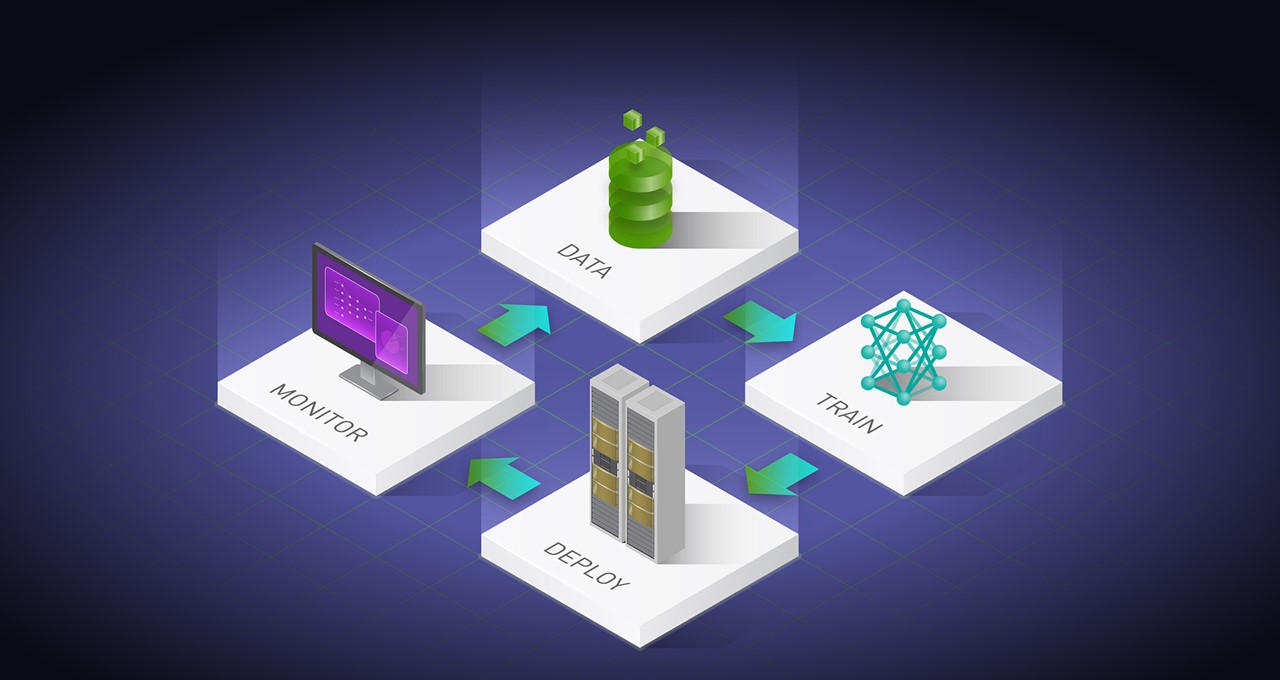

NVIDIA’s leading MLOps software partners are verified and certified for use with the NVIDIA AI Enterprise software suite, which provides an end-to-end platform for creating and accelerating production AI. Paired with NVIDIA AI Enterprise, the tools from NVIDIA’s MLOps partners help businesses develop and deploy AI successfully.

Enterprises can get AI up and running with help from these and other NVIDIA MLOps and AI platform partners:

- Canonical: Aims to accelerate at-scale AI deployments while making open source accessible for AI development. Canonical announced that Charmed Kubeflow is now certified as part of the DGX-Ready Software program, on both single-node and multi-node deployments of NVIDIA DGX systems. Designed to automate machine learning workflows, Charmed Kubeflow creates a reliable application layer where models can be moved to production.

- ClearML: Delivers a unified, open-source platform for continuous machine learning, from experiment management and orchestration to increased performance and ML production, trusted by teams at 1,300 enterprises worldwide. With ClearML, enterprises can orchestrate and schedule jobs on personalized compute fabric. Whether on premises or in the cloud, businesses can enjoy enhanced visibility over infrastructure usage while reducing compute, hardware and resource spend to optimize cost and performance. Now certified to run NVIDIA AI Enterprise, ClearML’s MLOps platform is more efficient across workflows, enabling greater optimization for GPU power.

- Dataiku: As the platform for Everyday AI, Dataiku enables data and domain experts to work together to build AI into their daily operations. Dataiku is now certified as part of the NVIDIA DGX-Ready Software program, which allows enterprises to confidently use Dataiku’s MLOps capabilities along with NVIDIA DGX AI supercomputers.

- Domino Data Lab: Offers a single pane of glass that enables the world’s most sophisticated companies to run data science and machine learning workloads in any compute cluster, in any cloud or on premises, in all regions. Domino Cloud, a new fully managed MLOps platform-as-a-service, is now available for fast and easy data science at scale. Certified to run on NVIDIA AI Enterprise last year, Domino Data Lab’s platform mitigates deployment risks and ensures reliable, high-performance integration with NVIDIA AI.

- Run:ai: Functions as a foundational layer within enterprises’ MLOps and AI infrastructure stacks through its AI computing platform, Atlas. The platform’s automated resource management capabilities allow organizations to properly align resources across the different MLOps platforms and tools running on top of Run:ai Atlas. Certified to offer NVIDIA AI Enterprise, Run:ai is also fully integrating NVIDIA Triton Inference Server, maximizing the utilization and value of GPUs in AI-powered environments.

- Weights & Biases (W&B): Helps machine learning teams build better models, faster. With just a few lines of code, practitioners can instantly debug, compare and reproduce their models, all while collaborating with their teammates. W&B is trusted by more than 500,000 machine learning practitioners from leading companies and research organizations around the world. Now validated to offer NVIDIA AI Enterprise, W&B looks to accelerate deep learning workloads across computer vision, natural language processing and generative AI.

NVIDIA cloud service provider partners have integrated MLOps into their platforms, which provide NVIDIA accelerated computing and software for data processing, wrangling, training and inference:

- Amazon Web Services: Amazon SageMaker for MLOps helps developers automate and standardize processes throughout the machine learning lifecycle, using NVIDIA accelerated computing. This increases productivity in training, testing, troubleshooting, deploying and governing ML models.

- Google Cloud: Vertex AI is a fully managed ML platform that helps fast-track ML deployments by bringing together a broad set of purpose-built capabilities. Vertex AI’s end-to-end MLOps capabilities make it easier to train, orchestrate, deploy and manage ML at scale, using NVIDIA GPUs optimized for a wide variety of AI workloads. Vertex AI also supports leading-edge solutions such as the NVIDIA Merlin framework, which maximizes performance and simplifies model deployment at scale. Google Cloud and NVIDIA collaborated to add Triton Inference Server as a backend on Vertex AI Prediction, Google Cloud’s fully managed model-serving platform.

- Azure: The Azure Machine Learning cloud platform is accelerated by NVIDIA and unifies ML model development and operations (DevOps). It applies DevOps principles and practices, such as continuous integration, delivery and deployment, to the machine learning process, with the goal of speeding experimentation, development and deployment of Azure machine learning models into production. It provides quality assurance through built-in responsible AI tools to help ML professionals develop fair, explainable and responsible models.

- Oracle Cloud: Oracle Cloud Infrastructure (OCI) AI Services is a collection of services with prebuilt machine learning models that make it easier for developers to apply NVIDIA-accelerated AI to applications and business operations. Teams within an organization can reuse the models, datasets and data labels across services. OCI AI Services makes it possible for developers to easily add machine learning to apps without slowing down application development.

- Alibaba Cloud: Alibaba Cloud Machine Learning Platform for AI provides an all-in-one machine learning service that requires little technical skill from users yet delivers high-performance results. Accelerated by NVIDIA, the Alibaba Cloud platform enables enterprises to quickly set up and deploy machine learning experiments to achieve business objectives.

Learn more about NVIDIA MLOps partners and their work at NVIDIA GTC, a global conference for the era of AI and the metaverse, running online through Thursday, March 23.

Watch NVIDIA founder and CEO Jensen Huang’s GTC keynote in replay: